Decentralized GPU: The Future AI Stack Explained Part One

Kevin Dwyer

May 2, 2024

AI has been (loudly) shaking up industries from healthcare to Web3 since ChatGPT brought an instantly usable generative AI application to the mainstream. The industry’s potential now seems limitless: the vast majority of companies consider AI one of their top priorities, and the industry is growing at close to 40% a year. By 2027, the AI market is projected to exceed $400 billion in value.

However, scaling AI capabilities to meet this insatiable demand presents a significant challenge: a shortage of GPUs (Graphics Processing Units). Global chip shortages and the intricacy of GPU manufacturing have constrained supply, restricting researchers and businesses in their efforts to scale and innovate in AI.

Decentralized GPUs present an alternative for small and medium AI companies struggling to source affordable resources. A decentralized GPU marketplace lets these companies source GPUs efficiently from a variety of providers instead of depending on a single vendor.

GPUs Are Critical In AI Model Creation & Execution

GPUs are crucial during model training and, for larger models, during inference. Their parallel processing power accelerates the massive computations needed to train complex AI models, significantly reducing training time. GPUs can also be used for fine-tuning, which involves retraining parts of the model, but they aren't always necessary for deployment and inference, where the focus is on efficient model execution. A typical model goes through the following stages:

- Data Preprocessing: Raw data gets scrubbed, shaped, and enriched to feed the AI model (think: organizing messy notes for studying).

- Model Training (GPU intensive): The AI model learns from the data through complex algorithms, like a student absorbing information (think: studying with a guide).

- Validation: We test the model on unseen data to see if it can apply its learnings in the real world (think: taking a final exam).

- Fine-Tuning: If the model struggles, we adjust its settings or even change its architecture (think: getting extra tutoring or switching classes).

- Deployment & Inference: The fine-tuned model goes live, making predictions on new data (think: graduating and putting your knowledge to work).
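To make the five stages concrete, here is a minimal sketch in plain Python. Everything in it is an illustrative assumption rather than a real workload: the synthetic data, the one-variable linear model, and the helper names `make_data`, `mse`, and `train` are all made up for this example, and a model this small needs no GPU at all.

```python
import random

def make_data(n, seed=0):
    """Synthetic data for y = 3x + 2 plus small noise (illustrative only)."""
    rng = random.Random(seed)
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    ys = [3.0 * x + 2.0 + rng.gauss(0.0, 0.05) for x in xs]
    return xs, ys

def mse(w, b, xs, ys):
    """Mean squared error of the model y = w * x + b."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def train(xs, ys, w=0.0, b=0.0, lr=0.1, epochs=200):
    """Plain gradient descent on the mean squared error."""
    n = len(xs)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

# 1. Data preprocessing (here only a train/validation split).
xs, ys = make_data(200)
train_x, train_y = xs[:160], ys[:160]
val_x, val_y = xs[160:], ys[160:]

# 2. Model training: the compute-heavy step that GPUs accelerate at scale.
w, b = train(train_x, train_y)

# 3. Validation on data the model has not seen.
val_loss = mse(w, b, val_x, val_y)

# 4. Fine-tuning: continue from the trained weights with a smaller step size.
w, b = train(train_x, train_y, w=w, b=b, lr=0.01, epochs=100)

# 5. Inference: predict for a new input.
prediction = w * 0.5 + b
print(f"w={w:.2f} b={b:.2f} val_loss={val_loss:.4f} prediction={prediction:.2f}")
```

In a real project, each stage would be handled by a library such as those listed later in this article, and the training loop is where GPU time is mostly spent.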

AI Components In a Nutshell

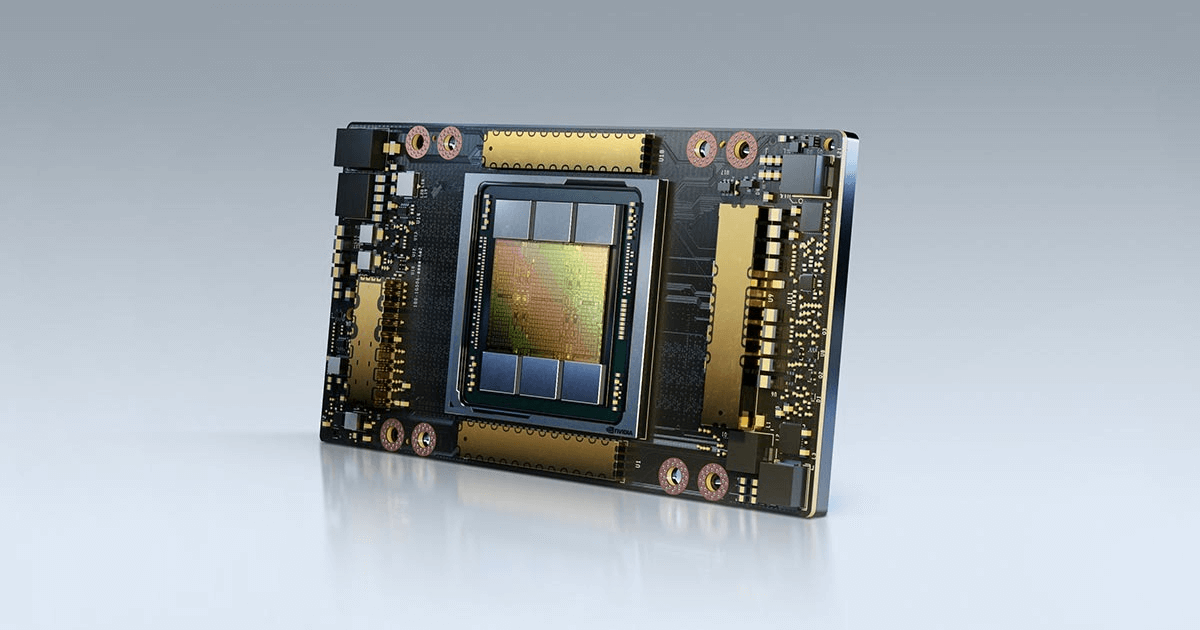

NVIDIA’s Powerful A100 Tensor Core GPU

Artificial intelligence (AI) development requires a combination of hardware, software, and data storage, among other resources.

Developers need a variety of components to build even a basic AI application, including:

- GPU: GPUs are essential for significantly accelerating training and inference for tasks involving large datasets or complex models.

- AI Library or Open-Source AI Model: These libraries provide pre-built tools and functions for various machine learning tasks, simplifying development. Some common options are TensorFlow, scikit-learn, Keras, and PyTorch.

- Data Storage: You'll need storage space for your data (the information the AI will learn from). For small projects, this could even be your computer's hard drive or an external storage device.

- Development Environment: An Integrated Development Environment (IDE) like PyCharm or RStudio can provide code completion, debugging tools, and other features to streamline the development process.

- Programming Platform: Programming platforms like CUDA can speed up development of AI computing tasks on NVIDIA GPUs. By allowing developers to write code that utilizes a GPU's parallel processing capabilities, CUDA significantly accelerates AI training and inference.
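As a small illustration of how these components meet in practice, the sketch below checks whether a CUDA-capable GPU is visible through PyTorch and falls back to the CPU otherwise. The helper name `pick_device` is hypothetical; the only library call it relies on is `torch.cuda.is_available()`, and it assumes nothing about your setup beyond Python itself.

```python
def pick_device():
    """Return "cuda" when PyTorch is installed and sees a CUDA GPU, else "cpu"."""
    try:
        import torch  # optional dependency; not required to run this sketch
    except ImportError:
        return "cpu"
    return "cuda" if torch.cuda.is_available() else "cpu"

device = pick_device()
print(f"Running on: {device}")
```

Code written this way runs unchanged on a laptop and on a rented GPU server, which is convenient when the hardware comes from different providers.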

From PC Gaming To AI Development: Why GPUs Are Essential

“The Cursed Aesthetic Of Old Graphics Card Boxes” Source: TheGamer

Long the hero of high-res gaming, Graphics Processing Units (GPUs) are now also the muscle behind artificial intelligence. Their ability to crunch massive datasets in parallel, thanks to thousands of cores, makes them well suited to accelerating AI training and inference. Let's explore why GPUs outperform CPUs in the world of AI.

- Parallel Processing Power: GPUs boast thousands of cores compared to the handful found in a typical CPU. This allows them to churn through massive datasets and complex AI computations much faster.

- Floating Point Operations: FLOPS (floating-point operations per second) measures how many calculations involving decimal numbers a processor can perform each second. In AI, FLOPS is a crucial metric because complex models rely heavily on these calculations for tasks like matrix multiplication during training and inference. The higher a system's FLOPS, the faster it can train and process information.

- Memory Bandwidth: GPUs have significantly higher memory bandwidth than CPUs. This is essential for AI tasks that require frequent data access during processing, minimizing bottlenecks and speeding up computations.

- Specialized Hardware: Many high-end GPUs feature Tensor Cores, hardware specifically designed to accelerate AI workloads and deep learning processes. These cores significantly improve performance for AI-specific tasks.
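A quick back-of-the-envelope calculation shows why floating-point throughput matters. Multiplying an m×k matrix by a k×n matrix takes roughly 2·m·k·n floating-point operations (one multiply and one add per term). The sketch below applies that count to one large multiplication; the two throughput figures are hypothetical round numbers chosen for illustration, not measurements of any particular chip.

```python
def matmul_flops(m, k, n):
    """Approximate floating-point operations for an (m x k) by (k x n) multiply.

    Each of the m * n outputs needs about k multiplications and k additions.
    """
    return 2 * m * k * n

def seconds_at(flops, flops_per_second):
    """Ideal run time if the processor sustained the given throughput."""
    return flops / flops_per_second

flops = matmul_flops(4096, 4096, 4096)

# Hypothetical sustained throughputs (assumptions, not benchmarks).
cpu_time = seconds_at(flops, 1e11)  # 100 GFLOPS
gpu_time = seconds_at(flops, 1e13)  # 10 TFLOPS

print(f"{flops:,} operations")
print(f"CPU: {cpu_time:.3f} s, GPU: {gpu_time:.4f} s")
```

Training a large model repeats multiplications like this many times over, so a gap of this size in a single operation compounds into a large difference in total training time. Real speedups also depend on memory bandwidth, which is why the two metrics are usually considered together.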

What is GPU Infrastructure?

As previously mentioned, GPUs (Graphics Processing Units) are specialized processors designed for handling complex graphical computations. Compared to CPUs (Central Processing Units), GPUs excel at parallel processing, making them ideal for tasks like:

- Decentralized GPU rendering – Used in applications like 3D animation and video editing, where rendering large datasets requires significant processing power.

- Machine learning and AI – Training complex algorithms often involves processing massive amounts of data, a task perfectly suited for GPUs.

- Cryptocurrency mining – Some cryptocurrencies use Proof-of-Work (PoW) consensus mechanisms, which have historically required significant GPU power for mining operations, although in many cases GPUs have been replaced by purpose-built ASIC miners.

GPU infrastructure is the foundational hardware that makes it possible to run and manage GPU-dependent systems such as AI. Traditionally, accessing powerful GPU infrastructure meant relying on centralized cloud providers. However, decentralized GPU networks offer a compelling alternative.

Options for Sourcing GPU Resources: What is Best for You?

When beginning AI projects, the question of GPU resources is paramount. Several options exist to secure the processing power needed to train and run complex models, each catering to different needs:

Building Compute Infrastructure In-House

For organizations with significant upfront capital and the technical expertise to manage their own infrastructure, building an in-house solution offers the most granular control over hardware and software. This approach allows for complete customization but requires a dedicated team to handle maintenance and upgrades.

Using A Large Cloud Service To Power AI

Large cloud service providers like Google Cloud Platform, Amazon Web Services, and Microsoft Azure offer a vast pool of GPUs accessible on demand. This flexibility suits businesses of any size that need scalability and can accept usage-based pricing that fluctuates and can become expensive.

Using Specialized Cloud Services To Power AI

For a more specialized approach, services like CoreWeave and Lambda cater specifically to AI workloads. These services offer optimized infrastructure, potentially leading to more cost-effective solutions compared to general-purpose cloud offerings. This option strikes a balance between affordability and performance for businesses focused on AI development.
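One way to weigh buying hardware against renting it is a simple break-even estimate. The sketch below is only that: the function names are hypothetical, and the prices in the example are placeholder figures, not quotes from any provider. It also ignores factors such as staffing, depreciation, and idle time.

```python
def rental_cost(gpu_hours, price_per_gpu_hour):
    """Total cost of renting GPUs for the given number of GPU-hours."""
    return gpu_hours * price_per_gpu_hour

def break_even_hours(purchase_price, owned_cost_per_hour, rental_price_per_hour):
    """GPU-hours after which owning becomes cheaper than renting.

    Returns None when renting is not more expensive per hour than running
    owned hardware, so the purchase never pays for itself.
    """
    saving_per_hour = rental_price_per_hour - owned_cost_per_hour
    if saving_per_hour <= 0:
        return None
    return purchase_price / saving_per_hour

# Placeholder figures for illustration only.
hours = break_even_hours(
    purchase_price=10_000.0,    # upfront cost of one GPU server
    owned_cost_per_hour=0.5,    # power, hosting, and maintenance
    rental_price_per_hour=2.5,  # on-demand rental price
)
print(f"Break-even after {hours:,.0f} GPU-hours")
print(f"Renting 100 GPU-hours costs {rental_cost(100, 2.5):.2f}")
```

A team that expects to stay well below the break-even point is usually better served by renting, while sustained, predictable workloads tilt the balance toward ownership.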

A New Alternative: Using Decentralized GPU Marketplaces

Decentralized GPU marketplaces give developers open and scalable access to plentiful GPU resources in a way that is more cost-efficient, transparent, and secure. This ecosystem democratizes access to computational power, bridging the gap between GPU providers and developers.

Decentralized GPU Explained

Decentralized GPU providers offer access to computing power from a network of individual servers and providers rather than from their own data centers. This network utilizes underutilized GPUs from various sources around the world, creating a system similar to peer-to-peer file sharing.

The benefits of using a decentralized GPU marketplace:

- Cost Efficiency: Decentralized platforms tap into underutilized resources, offering access to powerful GPUs at a fraction of the cost of traditional cloud services. This can be a game-changer for startups and individual researchers with limited budgets.

- Democratized AI: By making powerful GPUs more affordable and accessible, decentralized networks level the playing field. This allows smaller players to compete with larger companies that have traditionally dominated the AI space due to their access to expensive computing resources.

- Transparency and Security: Decentralized GPU providers can offer greater transparency in how resources are allocated and used, while also potentially enhancing security compared to some traditional cloud environments.

What is a Decentralized GPU Network?

Imagine a vast network of computers where anyone can contribute their unused processing power – specifically, the power of their graphics processing units (GPUs). This distributed system, facilitated by blockchain technology, is the essence of a decentralized GPU network. Unlike traditional cloud computing services controlled by centralized entities, decentralized GPU networks empower individuals and entities to share and monetize their idle GPU resources.
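At its core, such a marketplace matches jobs to providers' offers. The sketch below shows one simple way that matching could work: filter offers by availability, memory, and budget, then pick the cheapest. The `Offer` fields, the `cheapest_offer` helper, and every provider name and price are hypothetical and do not describe any real network's API or pricing.

```python
from dataclasses import dataclass

@dataclass
class Offer:
    provider: str          # hypothetical provider identifier
    memory_gb: int         # GPU memory offered
    price_per_hour: float  # asking price (placeholder values below)
    available: bool = True

def cheapest_offer(offers, min_memory_gb, max_price_per_hour):
    """Return the cheapest available offer that meets the job's needs, or None."""
    candidates = [
        o for o in offers
        if o.available
        and o.memory_gb >= min_memory_gb
        and o.price_per_hour <= max_price_per_hour
    ]
    return min(candidates, key=lambda o: o.price_per_hour, default=None)

offers = [
    Offer("provider-a", memory_gb=24, price_per_hour=0.40),
    Offer("provider-b", memory_gb=80, price_per_hour=1.20),
    Offer("provider-c", memory_gb=80, price_per_hour=0.95, available=False),
    Offer("provider-d", memory_gb=40, price_per_hour=0.70),
]

match = cheapest_offer(offers, min_memory_gb=40, max_price_per_hour=1.50)
print(match.provider if match else "no suitable offer")
```

A production marketplace would also have to account for reliability, location, and verification of the hardware being offered, none of which this sketch attempts.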

Why Are Decentralized GPUs Necessary?

The demand for powerful GPU processing is skyrocketing, driven by advancements in AI, machine learning, and blockchain technology. However, centralized cloud providers often come with limitations:

- High Costs: Renting GPU power from cloud providers can be expensive, especially for resource-intensive tasks.

- Limited Availability: During peak demand, accessing cloud-based GPUs can be challenging due to limited resources.

- Centralization Risks: Reliance on a single provider introduces potential security vulnerabilities and control issues.

Decentralized GPU networks offer a solution by:

- Democratizing Access: Anyone with a spare GPU can contribute to the network, increasing overall processing power and accessibility.

- Lowering Costs: The competitive nature of decentralized networks can lead to lower costs for users seeking GPU resources.

- Enhancing Security: Blockchain technology can provide a transparent, tamper-evident record of resource allocation and transactions.
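The transparency claim rests on a simple idea: each record includes a hash of the one before it, so changing an old entry breaks every hash that follows. The sketch below illustrates that idea with Python's standard `hashlib`. It is a toy, single-machine ledger with hypothetical field names, not a blockchain and not the record format of any real GPU network.

```python
import copy
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record

def record_hash(prev_hash, payload):
    """SHA-256 over the previous hash and the record's payload."""
    body = json.dumps({"prev": prev_hash, "payload": payload}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()

def add_record(chain, payload):
    """Append a record linked to the hash of the previous one."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    chain.append({"prev": prev_hash, "payload": payload,
                  "hash": record_hash(prev_hash, payload)})

def is_valid(chain):
    """Recompute every hash and check that the links are intact."""
    prev_hash = GENESIS
    for record in chain:
        if record["prev"] != prev_hash:
            return False
        if record["hash"] != record_hash(prev_hash, record["payload"]):
            return False
        prev_hash = record["hash"]
    return True

ledger = []
add_record(ledger, {"provider": "provider-a", "gpu_hours": 12})
add_record(ledger, {"provider": "provider-b", "gpu_hours": 3})

tampered = copy.deepcopy(ledger)
tampered[0]["payload"]["gpu_hours"] = 120  # rewrite history

print(is_valid(ledger), is_valid(tampered))
```

Real networks add consensus among many independent nodes on top of this linking, which is what makes rewriting the record impractical rather than merely detectable.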

How Does Decentralized GPU Infrastructure Support Artificial Intelligence (AI)?

The field of AI heavily relies on GPUs for training complex algorithms. Decentralized GPU networks can revolutionize AI development by:

- Reducing Costs: Researchers and developers can access affordable decentralized GPU resources for training AI models.

- Increasing Speed: Workloads that can be split across many GPUs can run in parallel, which can significantly shorten AI training times.

- Enabling Collaboration: Decentralized GPU networks can foster collaboration by enabling researchers to share and access computational resources.
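The speed benefit usually comes from data parallelism: a batch is split into shards, each worker computes a gradient on its shard, and the results are averaged. The sketch below simulates this on one machine with a one-parameter model to show that, for equal-sized shards, the averaged result matches the full-batch gradient. The function names and data are illustrative assumptions, and the sketch ignores the network communication cost that real distributed training must pay.

```python
import math

def gradient(w, xs, ys):
    """Gradient of the mean squared error for the model y = w * x."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)

def data_parallel_gradient(w, xs, ys, num_workers):
    """Average per-shard gradients, as separate workers would compute them."""
    if len(xs) % num_workers != 0:
        raise ValueError("batch size must be divisible by num_workers")
    shard = len(xs) // num_workers
    grads = [
        gradient(w, xs[i * shard:(i + 1) * shard], ys[i * shard:(i + 1) * shard])
        for i in range(num_workers)  # each iteration stands in for one worker
    ]
    return sum(grads) / num_workers

xs = [0.5 * i for i in range(8)]
ys = [2.0 * x for x in xs]

full = gradient(0.0, xs, ys)
parallel = data_parallel_gradient(0.0, xs, ys, num_workers=4)
print(full, parallel, math.isclose(full, parallel))
```

Because each shard can be processed at the same time, adding workers shortens the compute portion of each step, though the gain is limited in practice by how quickly workers can exchange their gradients.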

Final Thoughts On GPUs

AI's skyrocketing demand exposes a critical bottleneck: GPUs. While decentralized GPU marketplaces offer a solution today, long-term progress also depends on advancing GPUs and AI computation itself.

Promising avenues include:

- AI-Optimized Hardware: Specialized chips can outperform GPUs in specific tasks, boosting efficiency and cutting training times.

- Neuromorphic Computing: Inspired by the brain, this approach mimics its structure to revolutionize AI learning and processing.

- Efficient Algorithms: Researchers are constantly developing algorithms requiring less computational power, making AI development more accessible.

By combining these advancements, we can break free from the current limitations slowing development while democratizing and accelerating AI building for a new era of transformative applications.
